Latency Reduction and Load Balancing Technologies

1. Latency Reduction:

What is network latency?

Network latency is the delay in network communication: the time it takes for data to travel across the network. Networks with long delays have high latency, whereas networks with quick response times have low latency. Businesses prefer low-latency network communication because it improves productivity and operational efficiency. Certain types of applications, such as fluid dynamics and many other high-performance computing use cases, require low network latency to meet their computational demands. High network latency degrades application performance and increases the risk of application errors.
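As a rough illustration of how such a delay can be quantified, the sketch below times an operation several times and reports the median in milliseconds. The `simulated_request` function is a hypothetical stand-in that sleeps for about 20 ms; in practice it would be replaced by a real network round trip.

```python
import statistics
import time


def measure_latency_ms(operation, samples=5):
    """Time `operation` several times and return the median duration in ms."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        operation()
        durations.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(durations)


def simulated_request():
    # Hypothetical stand-in for a network round trip of roughly 20 ms.
    time.sleep(0.02)


median_ms = measure_latency_ms(simulated_request)
print(f"median latency: {median_ms:.1f} ms")
```

The median is used here rather than the mean so that a single slow sample does not dominate the result.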

Why is latency important?

As more companies embark on digital transformation, they rely on cloud-based applications and services to execute core business activities. These operations also hinge on data collected from Internet-connected smart devices, collectively known as the Internet of Things. Delays caused by latency can lead to inefficiencies, especially in real-time operations that depend on sensor data. High latency also reduces the return on a costly investment in network bandwidth, which hurts both user experience and customer satisfaction.

Which applications require low network latency?

All businesses want low latency, but it's even more important for certain industries and applications. The following are typical use cases.

Streaming analytics applications

Streaming analytics applications, such as real-time auctions, online betting, and multiplayer games, use and analyze large amounts of real-time streaming data from many different sources. Users of the above applications rely on accurate, real-time information to make decisions. They want low-latency networks because delays can have financial consequences.

Real-time data management

Enterprise applications often consolidate and optimize data from various sources, for example from other software, transactional databases, the cloud, and sensors. These applications use change data capture (CDC) technology to collect and process changes in data in real time. Network latency issues can easily hinder the performance of these applications.

API Integration

Two separate computer systems communicate with each other using an application programming interface (API). System processing often stops until a response is received from the API, so network latency causes application performance problems. For example, an airline booking website would use an API call to get the number of seats available on a particular flight. Network latency may slow the site down or cause it to stop responding. By the time the site receives the API response and resumes, someone else may have booked the ticket and you may have missed out.
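One common way to keep a slow API from stalling the caller is to put a deadline on the call. The following is a minimal sketch under stated assumptions: `fetch_available_seats` is a hypothetical function that simulates the booking API, and its `delay_s` parameter stands in for network latency.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def fetch_available_seats(flight, delay_s):
    # Hypothetical API call; delay_s simulates network latency.
    time.sleep(delay_s)
    return 42


def seats_or_fallback(flight, delay_s, timeout_s=0.1):
    """Return the seat count, or None if the API does not answer in time."""
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(fetch_available_seats, flight, delay_s)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return None  # the caller can show "try again" instead of hanging
    finally:
        executor.shutdown(wait=False)


print(seats_or_fallback("XY123", delay_s=0.0))  # fast response
print(seats_or_fallback("XY123", delay_s=0.3))  # slow response, falls back
```

A timeout does not remove the latency, but it lets the site stay responsive and decide what to show the user while the slow call is abandoned.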

Video-enabled remote operations

Some workflows, such as drill presses, endoscope cameras, and search and rescue drones with video capabilities, require the operator to control a machine remotely using video. In these cases, low-latency networks are critical to avoiding life-threatening consequences.

Reduce latency:

- Reducing latency ensures that data is transmitted from source to destination quickly, improving user experience and application efficiency.

- Load balancing distributes network traffic evenly across servers, prevents overload on a specific server, and optimizes resource usage.

- A smart automatic load-balancing algorithm, implemented in software or in a dedicated device, analyzes network traffic and distributes it in the most effective way.

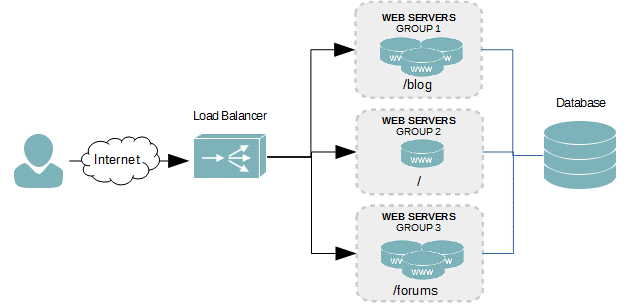

2. What is load balancing?

Load balancing is a method of distributing network traffic evenly across a pool of resources that support an application. Modern applications must handle millions of users simultaneously and accurately return text, video, images, and other data to each user quickly and reliably. To handle such high traffic, most applications have many resource servers, with data replicated between them. A load balancer is a device that sits between the user and the pool of servers and acts as an intermediary, ensuring that all server resources are used equally.
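Even distribution can be illustrated with round robin, one of the simplest balancing strategies: each new request goes to the next server in a fixed rotation. This is only a sketch; the class name and server names are hypothetical, and real load balancers typically offer several strategies.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation, one per request."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def next_server(self):
        return next(self._servers)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
# Nine requests over three servers: each server receives three of them.
assignments = Counter(balancer.next_server() for _ in range(9))
print(assignments)
```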

What are the benefits of load balancing?

Load balancing directs and controls Internet traffic between application servers and their visitors or clients, thereby improving application availability, scalability, security, and performance.

Application availability

Server errors or maintenance can increase app downtime, making your app unavailable to visitors. Load balancers increase your system's fault tolerance by automatically detecting server problems and redirecting client traffic to available servers. You can use load balancing to make the following tasks easier:

- Run maintenance or upgrade your application's servers with zero application downtime

- Provide automated disaster recovery to backup sites

- Perform health checks and prevent problems that can cause downtime
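The detect-and-redirect behavior described above can be sketched as a rotation that skips servers marked unhealthy. Everything here is illustrative: `FailoverBalancer`, the `probe` callback, and the server names are assumptions, and a real health check would issue a network request rather than call a local function.

```python
class FailoverBalancer:
    """Round-robin rotation that skips servers failing their health check."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._index = 0

    def run_health_checks(self, probe):
        # probe(server) -> bool is a hypothetical health check.
        self.healthy = {s for s in self.servers if probe(s)}

    def next_server(self):
        # Try each server at most once per request.
        for _ in range(len(self.servers)):
            server = self.servers[self._index % len(self.servers)]
            self._index += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = FailoverBalancer(["app-1", "app-2", "app-3"])
lb.run_health_checks(lambda server: server != "app-2")  # app-2 is down
routed = [lb.next_server() for _ in range(4)]
print(routed)  # app-2 never appears
```

Taking a server out of rotation this way is also how maintenance can proceed without downtime: mark it unhealthy, upgrade it, then let the next health check bring it back.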

Application scalability

You can use a load balancer to intelligently direct network traffic between multiple servers. Your applications can handle thousands of client requests because load balancing does the following:

- Prevent traffic congestion at any server

- Predict application traffic so you can add or remove different servers, as needed

- Add redundancy to your system so you can scale with confidence
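One way to keep any single server from becoming congested is a least-connections policy, which sends each new request to the server currently handling the fewest. The text above does not name a specific algorithm, so this is a swapped-in illustration; the class and server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Route each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties are broken by insertion order of the servers.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["s1", "s2"])
first = lb.acquire()    # both idle, so the first server is chosen
second = lb.acquire()   # s1 is busy, so s2 is chosen
lb.release(first)       # the request on s1 finishes
third = lb.acquire()    # s1 is idle again and gets the next request
print(first, second, third)
```

Adding capacity in this model is a matter of adding another key to `active`; new requests flow to the new server immediately because it starts with zero connections.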

Application security

Load balancers come with built-in security features that add another layer of security to your Internet applications. They are a useful tool for dealing with distributed denial of service attacks, in which attackers flood an application server with millions of concurrent requests, thereby causing server failure. A load balancer can also do the following:

- Monitor traffic and block harmful content

- Automatically redirect attack traffic to multiple backend servers to minimize impact

- Route traffic through a group of network firewalls for added security

Application performance

Load balancers improve application performance by reducing response time and network latency. They perform several important tasks, such as:

- Distribute load evenly between servers to improve application performance

- Redirect client requests to a geographically closer server to reduce latency

- Ensure reliability and performance of physical and virtual computing resources
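Routing to a geographically closer server can be approximated by comparing great-circle distances between the client and each site. The sketch below uses the haversine formula; the site names and coordinates are approximate, illustrative values, and production systems usually rely on measured latency or DNS-based geolocation rather than raw distance.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical server sites with approximate (latitude, longitude).
SITES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.90, -77.00),
    "singapore": (1.35, 103.82),
}


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_site(client_location, sites=SITES):
    """Return the name of the site closest to the client."""
    return min(sites, key=lambda name: haversine_km(client_location, sites[name]))


print(nearest_site((48.86, 2.35)))    # a client near Paris
print(nearest_site((35.68, 139.69)))  # a client near Tokyo
```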

Load balancing:

- Modern load balancing solutions are often integrated into application delivery controllers (ADCs), providing scalability, security, and high performance for web-based applications and microservices.

- Load balancing not only improves performance but also enhances security by preventing distributed denial of service (DDoS) attacks and hiding internal network topology information.

- Automated intelligent load balancing algorithms can be used in cloud environments, data centers, and wireless sensor networks, where intelligent resource allocation is necessary to maintain performance and reliability.